🚀 Your Algo Edge Just Leveled Up: Premium Plans Are Here! 🚀

A year in, our Starter, Pro, and Elite Quant Plans are crushing it—members are live-trading bots and booking 1-on-1 wins. Now with annual + lifetime deals for max savings.

Every premium member gets:
✅ Full code from every article
✅ Private GitHub repos + templates
✅ 3–5 deep-dive paid articles/mo
✅ Early access + live strategy teardowns

Pick your edge:

Starter (€20/mo) → 1 paid article + public repos

Builder (€30/mo) → Full code + private repos (most popular)

Master (€50/mo) → Two 1-on-1 calls + custom bot built for you

Best deals:
📅 Annual: 2 months FREE
🔒 Lifetime: Own it forever + exclusive perks

First 50 annual/lifetime signups get a free 15-min audit. Don’t wait—the market won’t.

— AlgoEdge Insights Team

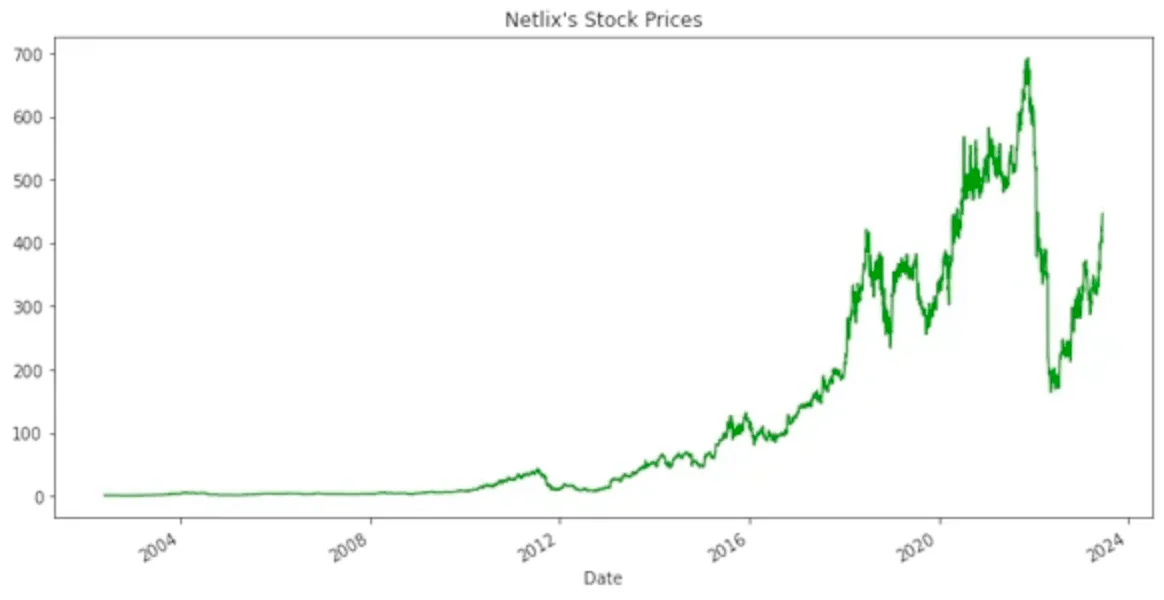

I prototyped an open‑source “Layered‑Memory Trader” (LMT) that lets three specialised agents (short‑term, swing, and macro) debate a trade, weigh their confidence, and act only when they’re in solid agreement. No real capital is at risk in this article; results are limited to back‑tests, so treat everything here as research, not investment advice. The design is inspired by Li et al.’s 2023 “TradingGPT” paper (CC BY 4.0).

Picture the 2 a.m. split‑brain moment every trader dreads. You’re bleary‑eyed in front of the charts: RSI screams oversold, the daily trend still slopes down, your gut whispers bounce, and your risk rules mutter “stay flat.” That tug‑of‑war (short‑term noise versus long‑term structure, hard metrics versus intuition) is what made me pause when I read the TradingGPT paper. What if software could host that same internal debate, remember each argument, and act only when the voices finally agree? Chasing that idea down the rabbit hole led me to build my own version of the paper’s design: LMT, the Layered‑Memory Trader.

The full end-to-end workflow is available in a Google Colab notebook, exclusively for paid subscribers of my newsletter. Paid subscribers also gain access to the complete article, including the full code snippet in the Google Colab notebook, which is accessible below the paywall at the end of the article. Subscribe now to unlock these benefits!

A Team of Tireless Analysts in Software

Instead of forcing you to choose between day‑trading instincts and swing‑trading logic, LMT embraces the chaos. It spins up three “analyst” agents, each with its own specialty and memory buffer, then lets them hash it out:

Short‑Term Agent: Lives on minute‑level momentum, perfect for tight entries and exits.

Swing‑Term Agent: Tracks multi‑day structure to filter noise and reinforce (or veto) fast signals.

Macro Agent: Scans weeks‑to‑months of price action and pipes in news sentiment for the bigger picture.

Only when a confidence‑weighted vote clears a threshold does LMT commit capital (well, simulated capital for now).

What You’ll See Next

Here’s the roadmap for the rest of the article. First, I’ll show how raw price feeds are transformed into indicator‑rich data frames; next, you’ll see how LMT keeps three lean yet informative memory buffers, one each for short‑term, swing, and macro context. From there, we’ll dive into the debate engine, where the agents cast weighted votes that determine whether to stay on the sidelines or pull the trigger. Finally, I’ll unpack the back‑test: equity curve, Sharpe ratio, drawdowns, and the missteps that surfaced. Whether you’re a quant hunting new architectures or a discretionary trader curious about AI, LMT offers a glimpse of where multi‑agent thinking can push trading systems. So let’s dive in.

1. System Overview

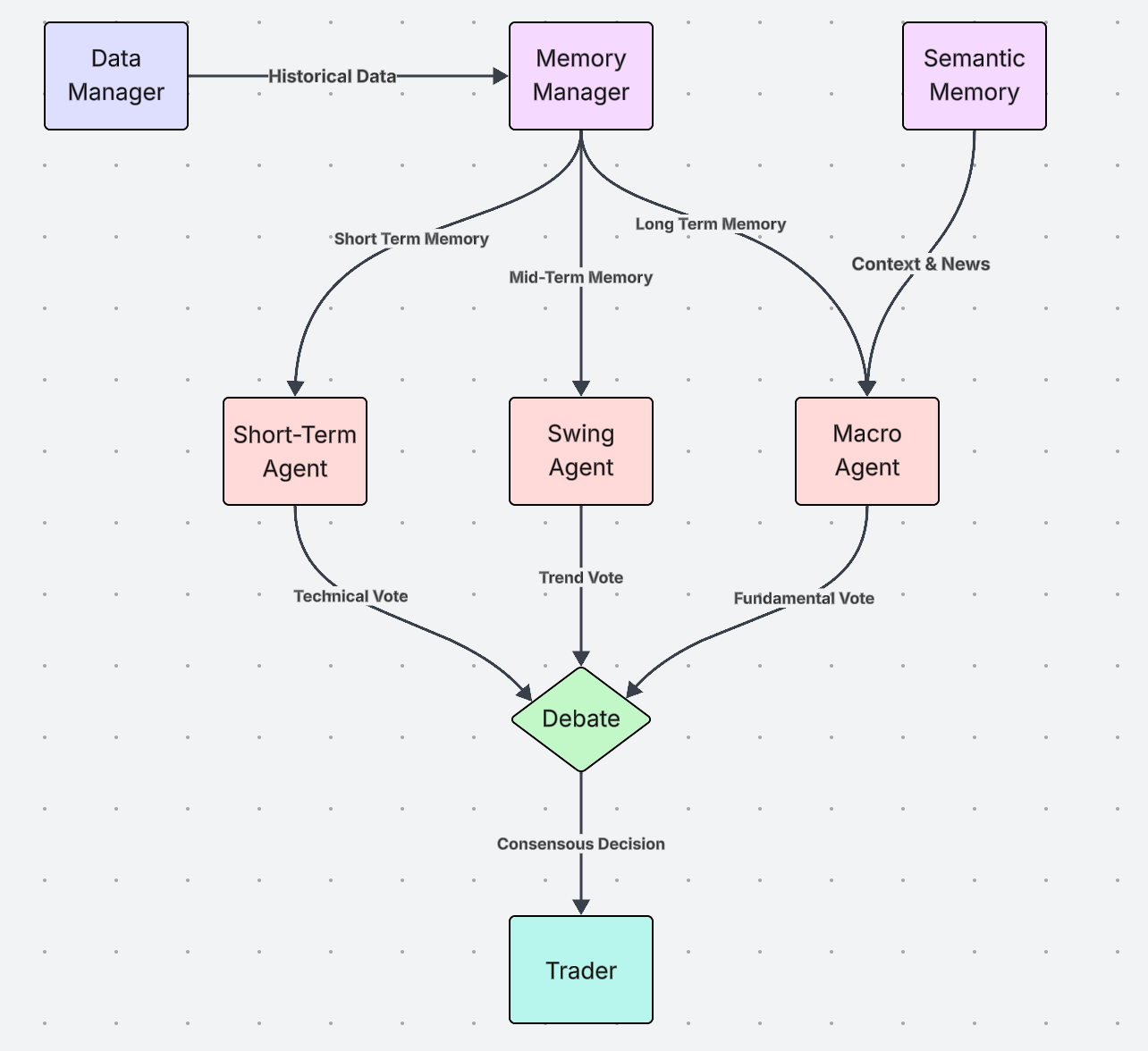

Figure 1. Layered‑Memory Trader architecture

LMT starts with a Data Manager that pulls raw candles and sentiment, then hands everything to a Memory Manager that slices the stream into three rolling buffers: short, swing, and macro.

Short‑Term Agent grabs the < 60‑minute buffer, hunts momentum, and casts a technical vote.

Swing Agent leans on multi‑day data for a trend vote.

Macro Agent reads weeks‑to‑months of price plus semantic‑news vectors for a fundamental vote.

Those three ballots flow into the Debate Engine, which runs a confidence‑weighted election. Only if the net conviction clears a configurable bar does the Trader module fire an order (simulated in our back‑tests).
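To make the flow concrete, here is a hypothetical, self-contained sketch of the loop described above. It is not the project's Trader or Debate code: `StubAgent`, `trend_rule`, `run_pipeline` and the threshold value are illustrative names I made up, the agents are simple rules instead of LLM calls, and each memory layer is just the last N bars of a single price stream.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

import pandas as pd

Vote = Tuple[str, float]  # (decision, confidence)


@dataclass
class StubAgent:
    """Hypothetical rule-based stand-in for an LLM agent; reads a single memory layer."""
    name: str
    layer: str  # 'short_term', 'mid_term' or 'long_term'
    rule: Callable[[pd.DataFrame], Vote]

    def vote(self, snapshot: Dict[str, pd.DataFrame]) -> Vote:
        return self.rule(snapshot[self.layer])


def trend_rule(window: pd.DataFrame) -> Vote:
    """Toy rule: BUY if the window closed higher than it started, SELL otherwise."""
    if len(window) < 2:
        return 'HOLD', 0.0
    return ('BUY', 0.7) if window['close'].iloc[-1] > window['close'].iloc[0] else ('SELL', 0.7)


def run_pipeline(prices: pd.Series, horizons: Dict[str, int],
                 agents: List[StubAgent], threshold: float) -> List[str]:
    """Replay bars one at a time: build capped layers, collect votes, act only above threshold."""
    frame = prices.to_frame('close')
    actions = []
    for i in range(1, len(frame) + 1):
        history = frame.iloc[:i]  # only data known at bar i (no look-ahead)
        snapshot = {layer: history.tail(n) for layer, n in horizons.items()}
        votes = [agent.vote(snapshot) for agent in agents]
        buy = sum(c for d, c in votes if d == 'BUY')
        sell = sum(c for d, c in votes if d == 'SELL')
        total = sum(c for _, c in votes)
        net = buy - sell
        conviction = abs(net) / total if total else 0.0
        if net != 0 and conviction >= threshold:
            actions.append('BUY' if net > 0 else 'SELL')
        else:
            actions.append('STAND ASIDE')
    return actions


agents = [StubAgent('Short-Term', 'short_term', trend_rule),
          StubAgent('Swing', 'mid_term', trend_rule),
          StubAgent('Macro', 'long_term', trend_rule)]
prices = pd.Series([100.0, 101.0, 102.0, 101.5, 100.0, 99.0])
print(run_pipeline(prices, {'short_term': 2, 'mid_term': 4, 'long_term': 6}, agents, threshold=0.5))
# ['STAND ASIDE', 'BUY', 'BUY', 'STAND ASIDE', 'SELL', 'SELL']
```

On the fourth bar the short window turns down while the longer windows still point up, so the split vote (conviction of about 0.33) fails the 0.5 bar and the loop stands aside; that is the behaviour the real Debate Engine is meant to produce with LLM votes.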

1.1. Data Ingestion and Market Intelligence Gathering

Everything the three agents know about the market comes from one place: the DataManager. Its job is simple but critical. First, it loads historical candles (or, later, live feeds) for the tickers in scope. Second, it restricts the data to either the training or the testing window defined in the config, depending on the back‑test mode. Third, it exposes get_data_for_ticker(), a helper that each agent can call on demand. Below is the full class so you can paste it straight into your project.

```python
from data.price_fetcher import fetch_price_data
from data.sentiment_fetcher import fetch_sentiment_data
import pandas as pd
import time
import yaml


class DataManager:
    def __init__(self, config, backtest_mode=None):
        self.config = config
        self.backtest_mode = backtest_mode
        self.all_historical_data = pd.DataFrame()
        self.tickers = []
        if self.backtest_mode:
            self._load_backtest_data()

    def _load_backtest_data(self):
        """Loads and prepares historical data for backtesting."""
        # Try to load data with news first, fallback to regular data
        news_data_path = self.config['backtest'].get('news_data_path', 'historical_data_with_news.csv')
        data_path = self.config['backtest']['full_data_path']
        try:
            print(f"Attempting to load data with news from {news_data_path}...")
            self.all_historical_data = pd.read_csv(news_data_path, parse_dates=['time'])
            print(f"Successfully loaded data with news from {news_data_path}")
        except FileNotFoundError:
            print(f"News data not found at {news_data_path}, loading regular data from {data_path}...")
            self.all_historical_data = pd.read_csv(data_path, parse_dates=['time'])

        # Add placeholder news column if not present
        if 'news_summary' not in self.all_historical_data.columns:
            self.all_historical_data['news_summary'] = 'No significant news'

        self.tickers = self.all_historical_data['ticker'].unique()

        if self.backtest_mode == 'train':
            start_date = pd.to_datetime(self.config['backtest']['training_period']['start'])
            end_date = pd.to_datetime(self.config['backtest']['training_period']['end'])
        elif self.backtest_mode == 'test':
            start_date = pd.to_datetime(self.config['backtest']['testing_period']['start'])
            end_date = pd.to_datetime(self.config['backtest']['testing_period']['end'])
        else:
            raise ValueError("Invalid backtest mode specified. Choose 'train' or 'test'.")

        self.all_historical_data = self.all_historical_data[
            (self.all_historical_data['time'] >= start_date) &
            (self.all_historical_data['time'] <= end_date)
        ]
        self.backtest_iterator = self.all_historical_data.groupby('ticker')
        print(f"Historical data for {self.backtest_mode}ing loaded for tickers: {self.tickers}.")

        # Check if news data is available
        if 'news_summary' in self.all_historical_data.columns:
            news_count = self.all_historical_data['news_summary'].notna().sum()
            print(f"News data available: {news_count} records with news information")
        else:
            print("No news data available in the dataset")

    def get_data_for_ticker(self, ticker):
        """Returns the historical data for a specific ticker."""
        if self.backtest_mode:
            try:
                return self.backtest_iterator.get_group(ticker).set_index('time')
            except KeyError:
                return pd.DataFrame()
        else:
            # Live mode would fetch data for a specific ticker
            return pd.DataFrame()


if __name__ == '__main__':
    with open('config.yaml', 'r') as f:
        config = yaml.safe_load(f)

    data_manager = DataManager(config, backtest_mode='train')
    aapl_data = data_manager.get_data_for_ticker('AAPL')
    print("AAPL Data:")
    print(aapl_data.head())
```

Once the raw candles are in memory, LMT augments them with a handful of momentum and volatility signals that every agent understands:

```python
# Technical indicator enhancement
# (calculate_rsi, calculate_macd and calculate_bollinger_bands are project helpers not shown here)
ticker_data['rsi'] = calculate_rsi(ticker_data)
ticker_data['macd'], ticker_data['macd_signal'] = calculate_macd(ticker_data)
ticker_data['upper_band'], ticker_data['lower_band'] = calculate_bollinger_bands(ticker_data)
```

This enriched frame is what flows into the Memory Manager next, ensuring each agent sees not just price but also momentum context before casting its vote.
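The three indicator helpers are not shown in the article. As a hedged sketch, here are textbook versions with common default parameters (RSI over 14 bars with Wilder smoothing, MACD 12/26/9, Bollinger Bands over 20 bars at 2 standard deviations). The signatures and defaults are my assumptions, chosen only so they fit the call sites above; the project's own implementations may differ.

```python
import numpy as np
import pandas as pd


def calculate_rsi(df: pd.DataFrame, period: int = 14, column: str = 'close') -> pd.Series:
    """Wilder-style RSI: exponential smoothing of gains and losses with alpha = 1/period."""
    delta = df[column].diff()
    gain = delta.clip(lower=0)
    loss = (-delta).clip(lower=0)
    avg_gain = gain.ewm(alpha=1 / period, min_periods=period, adjust=False).mean()
    avg_loss = loss.ewm(alpha=1 / period, min_periods=period, adjust=False).mean()
    rsi = 100 - 100 / (1 + avg_gain / avg_loss)
    # With no losses in the smoothing window, RSI is 100 by definition
    return rsi.where(avg_loss != 0, 100.0)


def calculate_macd(df: pd.DataFrame, fast: int = 12, slow: int = 26, signal: int = 9,
                   column: str = 'close') -> tuple[pd.Series, pd.Series]:
    """MACD line (fast EMA minus slow EMA) and its signal-line EMA."""
    ema_fast = df[column].ewm(span=fast, adjust=False).mean()
    ema_slow = df[column].ewm(span=slow, adjust=False).mean()
    macd = ema_fast - ema_slow
    macd_signal = macd.ewm(span=signal, adjust=False).mean()
    return macd, macd_signal


def calculate_bollinger_bands(df: pd.DataFrame, window: int = 20, num_std: float = 2.0,
                              column: str = 'close') -> tuple[pd.Series, pd.Series]:
    """Upper and lower bands: rolling mean plus/minus num_std rolling standard deviations."""
    mid = df[column].rolling(window).mean()
    std = df[column].rolling(window).std()  # pandas default: sample std (ddof=1)
    return mid + num_std * std, mid - num_std * std


# Usage on a synthetic random-walk series
ticker_data = pd.DataFrame({'close': 100 + np.cumsum(np.random.default_rng(0).normal(0, 1, 100))})
ticker_data['rsi'] = calculate_rsi(ticker_data)
ticker_data['macd'], ticker_data['macd_signal'] = calculate_macd(ticker_data)
ticker_data['upper_band'], ticker_data['lower_band'] = calculate_bollinger_bands(ticker_data)
print(ticker_data.tail(3))
```

The first rows of each column are NaN until enough history has accumulated (14 changes for RSI, 20 bars for the bands), which is worth remembering when the short‑term buffer is small. Some charting packages compute Bollinger Bands with the population standard deviation, so band values may differ slightly from this sketch.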

1.2. The Layered Memory

The heart of LMT is the MemoryManager class, which mimics how human traders naturally organize and recall market information. Human traders don’t treat every tick the same; they keep an intraday pulse, a swing view, and a long‑term thesis in separate mental buckets. MemoryManager copies that habit by maintaining three rolling buffers, plus a reflection log for post‑trade notes.

```python
import pandas as pd


class MemoryManager:
    def __init__(self, horizons: dict):
        # horizons maps each layer ('short_term', 'mid_term', 'long_term') to a maximum number of rows
        self.horizons = horizons
        self.short_term_memory = pd.DataFrame()
        self.mid_term_memory = pd.DataFrame()
        self.long_term_memory = pd.DataFrame()
        self.reflection_memory = pd.DataFrame(columns=['timestamp', 'decision', 'confidence', 'outcome', 'reflection'])

    def update_memory(self, new_data: pd.DataFrame):
        """
        Updates all memory layers with new data and ensures they do not exceed their configured size.

        :param new_data: A DataFrame containing the new data points to add.
        """
        if new_data.empty:
            return

        # Append the new rows to each layer, then keep only the most recent N rows
        self.short_term_memory = pd.concat([self.short_term_memory, new_data]).tail(self.horizons['short_term'])
        self.mid_term_memory = pd.concat([self.mid_term_memory, new_data]).tail(self.horizons['mid_term'])
        self.long_term_memory = pd.concat([self.long_term_memory, new_data]).tail(self.horizons['long_term'])

    def add_reflection(self, timestamp, decision, confidence, outcome, reflection):
        new_reflection = pd.DataFrame({
            'timestamp': [timestamp],
            'decision': [decision],
            'confidence': [confidence],
            'outcome': [outcome],
            'reflection': [reflection]
        })
        self.reflection_memory = pd.concat([self.reflection_memory, new_reflection], ignore_index=True)

    def get_memory_snapshot(self) -> dict:
        """
        Returns a dictionary containing the current state of all memory layers.
        """
        return {
            'short_term': self.short_term_memory,
            'mid_term': self.mid_term_memory,
            'long_term': self.long_term_memory,
            'reflections': self.reflection_memory
        }
```

How the three layers work in practice:

Short‑term memory holds the freshest ticks — price bursts, volume spikes, momentum shifts — so the intraday agent can fire quickly without being drowned by old data.

Mid‑term memory keeps several days to a few weeks, letting the swing agent spot trend continuations, pullbacks, or emerging ranges that a 200‑bar window would miss.

Long‑term memory stretches back months, giving the macro agent enough context to judge whether the current action is a blip or a regime change.

By capping each buffer at its horizon (a maximum number of rows set in the horizons config), LMT avoids the classic pitfall of information overload while still preserving the context each agent truly needs. In other words, every analyst sees exactly the slice of market history that matches its job description; no more, no less.
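The capping rule is easy to see in isolation. The sketch below is a hypothetical variant, not the article's MemoryManager: it swaps repeated DataFrame concatenation for `collections.deque` with `maxlen`, which discards the oldest entry automatically once the buffer is full. `DequeMemory` and the horizon sizes are illustrative assumptions.

```python
from collections import deque
from typing import Dict

import pandas as pd


class DequeMemory:
    """Hypothetical variant of the three rolling buffers, built on collections.deque."""

    def __init__(self, horizons: Dict[str, int]):
        # deque(maxlen=n) silently drops the oldest item once n items are stored,
        # which is the same "cap each buffer to its horizon" rule
        self.layers = {name: deque(maxlen=n) for name, n in horizons.items()}

    def update(self, bar: dict) -> None:
        # Every new bar goes into every layer; only the retention length differs
        for buffer in self.layers.values():
            buffer.append(bar)

    def snapshot(self) -> Dict[str, pd.DataFrame]:
        # Convert to DataFrames so agents receive the same type MemoryManager returns
        return {name: pd.DataFrame(list(buffer)) for name, buffer in self.layers.items()}


mem = DequeMemory({'short_term': 3, 'mid_term': 5, 'long_term': 10})  # illustrative bar counts
for i in range(12):
    mem.update({'bar': i, 'close': 100.0 + i})
print({name: df['bar'].tolist() for name, df in mem.snapshot().items()})
# {'short_term': [9, 10, 11], 'mid_term': [7, 8, 9, 10, 11], 'long_term': [2, 3, 4, 5, 6, 7, 8, 9, 10, 11]}
```

After twelve bars each layer holds only its most recent N entries, so the short‑term agent never sees bar 0 while the macro agent still does until its own window rolls past it.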

1.3. The Specialized Agent Team: Different Minds, Common Goal

Once the market stream is bucketed into three memories, LMT assigns a dedicated analyst to each horizon. Every agent inherits from the same BaseAgent interface, so they share the same constructor (a name, the config, and a SemanticMemory handle) and the same vote(memory_snapshot) method returning a (decision, confidence) pair, but each brings its own prompt template and voting logic.

```python
from abc import ABC, abstractmethod

import pandas as pd

from memory.semantic_memory import SemanticMemory


class BaseAgent(ABC):
    """
    Abstract base class for all trading agents.
    """

    def __init__(self, name: str, config: dict, semantic_memory: SemanticMemory):
        """
        Initializes the agent.

        :param name: The name of the agent (e.g., "Short-Term Agent").
        :param config: A configuration dictionary.
        :param semantic_memory: An instance of SemanticMemory for searching textual data.
        """
        self.name = name
        self.config = config
        self.semantic_memory = semantic_memory

    @abstractmethod
    def vote(self, memory_snapshot: dict) -> tuple[str, float]:
        """Returns a (decision, confidence) pair, e.g. ('BUY', 0.8)."""
```

Short-Term Agent: This agent thinks like a scalper. It works from the freshest slice of the short‑term buffer (the last 10 bars of price and indicators), hands it to a lightweight LLM (Gemini 1.5 Flash), and asks for a BUY / SELL / HOLD call with a confidence score. High speed, high noise, high conviction.

```python
import os
import re

import google.generativeai as genai
import pandas as pd

from agents.base_agent import BaseAgent
from memory.semantic_memory import SemanticMemory

genai.configure(api_key=os.getenv("GEMINI_API_KEY"))


class ShortTermAgent(BaseAgent):
    """
    Agent focusing on short-term data to make trading decisions, using an LLM for analysis.
    """

    def __init__(self, name: str, config: dict, semantic_memory: SemanticMemory):
        super().__init__(name, config, semantic_memory)
        self.model = genai.GenerativeModel('gemini-1.5-flash')

    def vote(self, memory_snapshot: dict) -> tuple[str, float]:
        """
        Analyzes short-term memory using an LLM to decide on a trading action.
        """
        short_term_data = memory_snapshot.get('short_term')
        if short_term_data is None or short_term_data.empty:
            return 'HOLD', 0.5

        ticker = short_term_data['ticker'].iloc[-1]

        # Prepare the prompt
        prompt = f"You are a short-term momentum trader specializing in {ticker}. Based on the recent price action and technical indicators, what is your recommendation? Provide your answer as 'VOTE: [BUY/SELL/HOLD], CONFIDENCE: [0.0-1.0]'.\n\n"
        prompt += f"Short-Term Price & Indicator Data for {ticker} (last 10 data points):\n"
        prompt += short_term_data[['close', 'rsi', 'macd', 'upper_band', 'lower_band']].tail(10).to_string() + "\n"

        # Get the LLM response
        try:
            response = self.model.generate_content(prompt)

            # Parse the response; the number pattern ignores trailing punctuation such as "0.8."
            vote_match = re.search(r"VOTE:\s*(BUY|SELL|HOLD)", response.text, re.IGNORECASE)
            confidence_match = re.search(r"CONFIDENCE:\s*([0-9]*\.?[0-9]+)", response.text, re.IGNORECASE)

            if vote_match and confidence_match:
                vote = vote_match.group(1).upper()
                # Clamp to [0, 1] in case the model answers outside the requested range
                confidence = min(max(float(confidence_match.group(1)), 0.0), 1.0)
                print(f"ShortTermAgent LLM Vote: {vote}, Confidence: {confidence}")
                return vote, confidence
            else:
                print(f"ShortTermAgent: Could not parse LLM response: {response.text}")
                return 'HOLD', 0.5
        except Exception as e:
            print(f"An error occurred while calling the Gemini API: {e}")
            return 'HOLD', 0.5
```

Mid-Term Agent: This agent lives in the middle. It pulls the mid‑term buffer (days to weeks), abstains unless it holds at least 20 bars, and hands the LLM the last 20 bars of price and indicators plus 5‑ and 20‑period moving averages, so the model can judge whether the broader trend supports a fast signal. Its vote often moderates the scalper’s enthusiasm.

```python
import os
import re

import google.generativeai as genai
import pandas as pd

from agents.base_agent import BaseAgent
from memory.semantic_memory import SemanticMemory

genai.configure(api_key=os.getenv("GEMINI_API_KEY"))


class MidTermAgent(BaseAgent):
    """
    Agent focusing on mid-term data to make trading decisions, using an LLM for analysis.
    """

    def __init__(self, name: str, config: dict, semantic_memory: SemanticMemory):
        super().__init__(name, config, semantic_memory)
        self.model = genai.GenerativeModel('gemini-1.5-flash')

    def vote(self, memory_snapshot: dict) -> tuple[str, float]:
        """
        Analyzes mid-term memory using an LLM to decide on a trading action.
        """
        mid_term_data = memory_snapshot.get('mid_term')
        # At least 20 bars are needed for the 20-period moving average below
        if mid_term_data is None or mid_term_data.empty or len(mid_term_data) < 20:
            return 'HOLD', 0.5

        ticker = mid_term_data['ticker'].iloc[-1]

        # Prepare the prompt
        prompt = f"You are a mid-term trend analyst specializing in {ticker}. Based on the following price data and technical indicators, what is your recommendation? Provide your answer as 'VOTE: [BUY/SELL/HOLD], CONFIDENCE: [0.0-1.0]'.\n\n"

        # Add mid-term price trend with moving averages and RSI
        prompt += f"Mid-Term Price & Indicator Data for {ticker} (last 20 data points):\n"
        prompt += mid_term_data[['close', 'rsi', 'macd', 'upper_band', 'lower_band']].tail(20).to_string() + "\n\n"
        # Windows are counted in bars, so "period" is accurate whatever the bar size
        prompt += "5-period Moving Average:\n"
        prompt += mid_term_data['close'].rolling(window=5).mean().tail().to_string() + "\n\n"
        prompt += "20-period Moving Average:\n"
        prompt += mid_term_data['close'].rolling(window=20).mean().tail().to_string() + "\n"

        # Get the LLM response
        try:
            response = self.model.generate_content(prompt)

            # Parse the response; the number pattern ignores trailing punctuation such as "0.8."
            vote_match = re.search(r"VOTE:\s*(BUY|SELL|HOLD)", response.text, re.IGNORECASE)
            confidence_match = re.search(r"CONFIDENCE:\s*([0-9]*\.?[0-9]+)", response.text, re.IGNORECASE)

            if vote_match and confidence_match:
                vote = vote_match.group(1).upper()
                # Clamp to [0, 1] in case the model answers outside the requested range
                confidence = min(max(float(confidence_match.group(1)), 0.0), 1.0)
                print(f"MidTermAgent LLM Vote: {vote}, Confidence: {confidence}")
                return vote, confidence
            else:
                print(f"MidTermAgent: Could not parse LLM response: {response.text}")
                return 'HOLD', 0.5
        except Exception as e:
            print(f"An error occurred while calling the Gemini API: {e}")
            return 'HOLD', 0.5
```

Macro (Long-Term) Agent: This agent is the strategist. It reads the long‑term buffer (months of price action, of which the last 10 bars go into the prompt) plus the top three hits of a semantic search for “market sentiment” over the news and reflections stored in the SemanticMemory class, such as Fed statements or earnings chatter. If the macro view screams “risk‑off,” a strong, opposite‑direction vote can cancel out a weaker consensus from the other two.

```python
import os
import re

import google.generativeai as genai
import pandas as pd

from agents.base_agent import BaseAgent
from memory.semantic_memory import SemanticMemory

genai.configure(api_key=os.getenv("GEMINI_API_KEY"))


class LongTermAgent(BaseAgent):
    """
    Agent focusing on long-term data and macroeconomic trends, using an LLM for analysis.
    """

    def __init__(self, name: str, config: dict, semantic_memory: SemanticMemory):
        super().__init__(name, config, semantic_memory)
        self.model = genai.GenerativeModel('gemini-1.5-flash')

    def vote(self, memory_snapshot: dict) -> tuple[str, float]:
        """
        Analyzes long-term memory and semantic context using an LLM to decide on a trading action.
        """
        long_term_data = memory_snapshot.get('long_term')
        if long_term_data is None or long_term_data.empty:
            return 'HOLD', 0.5

        ticker = long_term_data['ticker'].iloc[-1]

        # Prepare the prompt
        prompt = f"You are a long-term trading analyst specializing in {ticker}. Based on the following data, what is your recommendation? Provide your answer as 'VOTE: [BUY/SELL/HOLD], CONFIDENCE: [0.0-1.0]'.\n\n"

        # Add long-term price trend
        prompt += f"Long-Term Price & Indicator Data for {ticker} (last 10 data points):\n"
        prompt += long_term_data[['close', 'rsi', 'macd', 'upper_band', 'lower_band']].tail(10).to_string() + "\n\n"

        # Add semantic memory context
        try:
            semantic_results = self.semantic_memory.search_memory("market sentiment", k=3)
            if semantic_results:
                prompt += "Recent News & Reflections:\n"
                for result in semantic_results:
                    prompt += f"- {result['text']} (distance: {result['distance']:.2f})\n"
        except (IndexError, ValueError):
            # Not enough memories to search or other value error
            prompt += "No significant news or reflections found.\n"

        # Get the LLM response
        try:
            response = self.model.generate_content(prompt)

            # Parse the response; the number pattern ignores trailing punctuation such as "0.8."
            vote_match = re.search(r"VOTE:\s*(BUY|SELL|HOLD)", response.text, re.IGNORECASE)
            confidence_match = re.search(r"CONFIDENCE:\s*([0-9]*\.?[0-9]+)", response.text, re.IGNORECASE)

            if vote_match and confidence_match:
                vote = vote_match.group(1).upper()
                # Clamp to [0, 1] in case the model answers outside the requested range
                confidence = min(max(float(confidence_match.group(1)), 0.0), 1.0)
                print(f"LongTermAgent LLM Vote: {vote}, Confidence: {confidence}")
                return vote, confidence
            else:
                print(f"LongTermAgent: Could not parse LLM response: {response.text}")
                return 'HOLD', 0.5
        except Exception as e:
            print(f"An error occurred while calling the Gemini API: {e}")
            return 'HOLD', 0.5
```

Because all three agents return a (vote, confidence) pair, the Debate Engine can treat them symmetrically: it weights each vote by its conviction, resolves deadlocks to HOLD, and lets LMT stand aside when consensus is weak. All three agents call the same model, so the diversity of thought comes from what each one sees (its memory window and context), not from raw model size, and that diversity is where LMT looks for its edge.
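The three vote() methods repeat the same prompt, parse, and fallback code. One option, sketched here under my own assumptions (`parse_vote` and its regexes are hypothetical, not part of the project), is to pull the parsing into a shared helper that tolerates Markdown bold, square brackets and trailing punctuation, and clamps the confidence to [0, 1] before it ever reaches the Debate Engine.

```python
import re
from typing import Tuple

# Allow optional spaces, "[" or "*" between the label and the value,
# e.g. "VOTE: [BUY]" or "**VOTE:** BUY"
VOTE_RE = re.compile(r"VOTE:[\s\[\*]*(BUY|SELL|HOLD)\b", re.IGNORECASE)
CONF_RE = re.compile(r"CONFIDENCE:[\s\[\*]*(\d*\.?\d+)", re.IGNORECASE)


def parse_vote(text: str, default: Tuple[str, float] = ('HOLD', 0.5)) -> Tuple[str, float]:
    """Extract (decision, confidence) from an LLM reply, falling back to `default`."""
    vote_match = VOTE_RE.search(text)
    conf_match = CONF_RE.search(text)
    if not (vote_match and conf_match):
        return default
    confidence = float(conf_match.group(1))
    # The prompt asks for 0.0-1.0, but a model may answer "85" or "1.2"; clamp either way
    confidence = min(max(confidence, 0.0), 1.0)
    return vote_match.group(1).upper(), confidence


print(parse_vote("**VOTE:** BUY\n**CONFIDENCE:** 0.72."))  # ('BUY', 0.72)
print(parse_vote("I would wait for confirmation."))       # ('HOLD', 0.5)
```

Each agent's vote() could then end with `return parse_vote(response.text)` inside its try block. Clamping treats "85" as full confidence rather than 0.85; rescaling values above 1 would be an equally defensible choice.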

1.4. Debate: turning three opinions into one trade

After each agent casts a (vote, confidence) pair, LMT runs a confidence‑weighted election rather than a simple majority. The logic loosely mirrors an institutional trading desk: each view counts in proportion to how certain its owner is, not how loudly it is voiced.

```python
from typing import List, Tuple

from agents.base_agent import BaseAgent


class Debate:
    """
    Orchestrates a debate among trading agents to reach a consensus.
    """

    def __init__(self, agents: List[BaseAgent]):
        self.agents = agents

    def run(self, memory_snapshot: dict) -> Tuple[str, float, List[dict]]:
        """
        Gathers votes from all agents and determines the final decision using confidence weighting.
        """
        votes = []
        for agent in self.agents:
            decision, confidence = agent.vote(memory_snapshot)
            votes.append({'agent': agent.name, 'decision': decision, 'confidence': confidence})

        final_decision, final_confidence = self._resolve_votes_with_weighting(votes)
        return final_decision, final_confidence, votes

    def _resolve_votes_with_weighting(self, votes: List[dict]) -> Tuple[str, float]:
        """
        Resolves the collected votes into a single decision using confidence as a weight.
        """
        if not votes:
            return 'HOLD', 0.5

        buy_strength = sum(v['confidence'] for v in votes if v['decision'] == 'BUY')
        sell_strength = sum(v['confidence'] for v in votes if v['decision'] == 'SELL')

        # Normalize by the sum of all confidences (HOLD votes included) to get a weighted average
        total_confidence = sum(v['confidence'] for v in votes)
        if total_confidence == 0:
            return 'HOLD', 0.0

        # The final confidence is the difference between buy and sell strength, normalized
        net_strength = buy_strength - sell_strength
        final_confidence = abs(net_strength) / total_confidence

        if net_strength > 0:
            return 'BUY', final_confidence
        elif net_strength < 0:
            return 'SELL', final_confidence
        else:
            return 'HOLD', 1.0 - final_confidence  # Confidence in HOLD is inverse of conviction
```

For instance, imagine the short‑term agent comes in hot with BUY (0.9), the swing agent counters with SELL (0.4), and the macro agent opts for HOLD (0.2).

When the Debate engine sums the weighted opinions, buy strength is 0.9 and sell strength is 0.4, giving a net of 0.5. The code divides that by the total confidence of all three votes, including the HOLD (0.9 + 0.4 + 0.2 = 1.5), which yields an overall confidence of roughly 0.33, so the system’s verdict is BUY at about 33 % conviction.

Crucially, if that 0.33 score falls below the mean_confidence_to_act threshold set in the config, LMT simply stands aside: no trade, no churn. In this way, the debate layer functions as built‑in risk control, throttling impulsive signals and ensuring capital only moves when the collective conviction is solid.
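To double-check the arithmetic, the sketch below replays the example with a standalone copy of the weighting rule (`resolve_votes` mirrors `_resolve_votes_with_weighting`, but on plain tuples) plus a hypothetical `gate()` that applies a mean_confidence_to_act-style threshold. The threshold values are illustrative, not taken from the project's config.

```python
from typing import List, Tuple


def resolve_votes(votes: List[Tuple[str, float]]) -> Tuple[str, float]:
    """Same weighting as Debate._resolve_votes_with_weighting, on (decision, confidence) tuples."""
    if not votes:
        return 'HOLD', 0.5
    buy = sum(c for d, c in votes if d == 'BUY')
    sell = sum(c for d, c in votes if d == 'SELL')
    total = sum(c for _, c in votes)  # HOLD confidence also lands in the denominator
    if total == 0:
        return 'HOLD', 0.0
    net = buy - sell
    conviction = abs(net) / total
    if net > 0:
        return 'BUY', conviction
    if net < 0:
        return 'SELL', conviction
    return 'HOLD', 1.0 - conviction


def gate(decision: str, confidence: float, mean_confidence_to_act: float) -> str:
    """Hypothetical trade gate: act only when conviction clears the configured bar."""
    if decision != 'HOLD' and confidence >= mean_confidence_to_act:
        return decision
    return 'STAND ASIDE'


votes = [('BUY', 0.9), ('SELL', 0.4), ('HOLD', 0.2)]
decision, confidence = resolve_votes(votes)
print(decision, round(confidence, 3))                          # BUY 0.333
print(gate(decision, confidence, mean_confidence_to_act=0.5))  # STAND ASIDE
print(gate(decision, confidence, mean_confidence_to_act=0.3))  # BUY
```

Note that an abstaining HOLD voter still adds its confidence to the denominator, so a confident HOLD dilutes conviction and makes the threshold harder to clear. Whether that is desirable is a design choice; excluding HOLD from the total would have produced 0.5 / 1.3 ≈ 0.38 here.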

1.5. Backtesting Framework and Performance Analysis

